“Data Mining Diplomacy”: A Computational Analysis of the State Department’s Foreign Policy Files

Micki Kaufman, U.S. History

Scarcity of information is a common frustration for historians, especially, though not exclusively, for researchers of antiquity. Increasingly, however, historians face the converse and similarly daunting problem of overabundance. Google CEO Eric Schmidt has estimated that more pages’ worth of information have been produced since 2005 than in the entire prior history of mankind, and that 200 times this amount will be generated in 2015 alone. The rate of increase of cultural output is so staggering as to stymie any historian or diplomat who relies solely on analog tools to study it. Overwhelmed by this deluge of available information, a vast field of haystacks within which they must locate the needles (and, presumably, use them to knit together a valid historical interpretation), historians of the modern era have struggled to make effective sense of big data archives. Given the role Henry Kissinger played in first computerizing the State Department in the late 1960s, however, it is perhaps not surprising that the continuing declassification of large volumes of material has made historians of the Kissinger/Nixon era among the first modern beneficiaries of a legacy of countless mainframes’ worth of information, with all the attendant promise and peril. As ever larger archives of this human cultural output accumulate, historians are beginning to employ other tools and methods, including those developed in fields such as computational biology and linguistics, to overcome information overload and facilitate new historical interpretations.

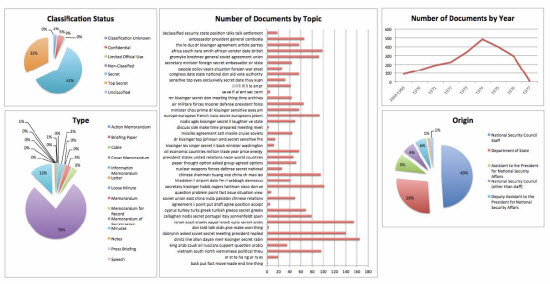

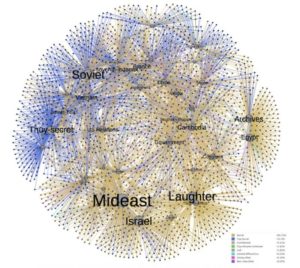

To cope with the overload, historians must begin to embrace methods such as n-gram mapping, topic modeling and machine learning, methods that have only just begun to penetrate the practice of historical research and that have thus far been applied primarily to early American and European studies. This research project (an extension of my first-year doctoral thesis) applies big data computational techniques (collectively referred to as Culturomics) like those employed by Michel (“Culturomics: Quantitative Analysis of Culture Using Millions of Digitized Books”) and Nelson (“Mining the Dispatch”) to the study of twentieth-century diplomatic history. By using these techniques to generate useful finding aids for researchers, this project by @mickikaufman is both a contribution to the nascent field of digital humanities and a first effort at Diplonomics.
