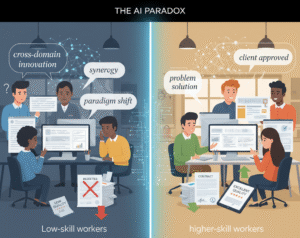

When I analyzed how low-skill freelancers were using large language models (LLMs) on online labor platforms, I expected to see a story of empowerment: one where technology leveled the playing field and allowed those with fewer resources to compete with highly skilled peers. The reality, however, was more complex and, in many ways, more sobering.

Low-skill workers did use LLMs to expand their knowledge into more domains. Their proposals covered a wider range of topics, their vocabulary became more sophisticated, and their writing sounded more confident. But despite this apparent growth, they were less likely to win contracts, and their completed projects were consistently rated as lower quality than those of higher-skill workers using the same tools.

This finding points to something deeper in the relationship between AI and human learning, which I call the capability illusion. LLMs create a sense of increased competence, but that competence is often superficial. The model helps users access information and generate output that looks expert, but without foundational understanding, they cannot evaluate, refine, or build upon what the model provides. The illusion of mastery replaces the practice of mastery.

This isn’t just a labor market problem; it’s an educational one. If students begin relying on AI before developing core skills, we risk raising a generation that can produce polished answers without understanding the logic behind them. The question for educators, then, is not whether to integrate AI into learning, but how to do it in a way that strengthens human cognition rather than outsourcing it.

Tool Dependency vs. Skill Development

At the heart of this issue is a growing dependency on AI as a shortcut to performance. LLMs make it possible to produce high-quality text, code, or analysis almost instantly, but they also make it easy to skip the slow, often frustrating process of learning. For workers and students alike, this can create the illusion of progress: an increase in output that is not matched by an increase in understanding.

Education, therefore, faces a new dual mandate: to teach both foundational thinking and AI collaboration. The challenge lies in ensuring that one strengthens the other, rather than allowing AI to replace the very processes that build expertise.

Teaching AI Literacy as Judgment

Much of the current discussion around “AI literacy” focuses on prompt engineering: how to phrase a question in a way that elicits better answers. While this skill has practical value, it misses the larger point. True AI literacy is not about generating outputs; it’s about developing judgment.

To be AI literate is to know when to trust the model, when to verify its claims, and when to reject its conclusions entirely. It means understanding not only how to use AI, but also what its limitations are: where it tends to hallucinate, what kinds of biases it reproduces, and how its probabilistic reasoning differs from human logic.

In this sense, AI literacy should look less like programming and more like philosophy: a practice of questioning, verifying, and reflecting on what it means to know something in the age of machine intelligence.

Rethinking Assessment: Measuring Understanding, Not Output

In an AI-mediated world, output alone no longer reflects ability. The line between human and machine contribution is increasingly blurred. To ensure that education continues to measure learning, assessments must evolve to capture how students arrive at their answers, not just what those answers are.

This could take the form of reflective essays, process logs, or oral defenses where students articulate their reasoning independently of AI. The emphasis should shift toward metacognition, the ability to describe one’s thought process, evaluate alternatives, and justify decisions. In other words, we need assessments that reward thinking, not just completion.

The Skilled User Model

High-skill workers in my study used LLMs differently. They didn’t depend on AI to fill gaps; they used it to amplify strengths. They knew where their expertise ended and used the model strategically to expand beyond it by automating routine tasks, exploring new ideas, and synthesizing information faster. Their value came not from resisting AI, but from knowing how to integrate it intelligently.

This is the model education should aim for: the skilled user. The skilled user treats AI as an assistant, not an authority. They leverage the model for efficiency but retain control over interpretation, ethical reasoning, and creative direction. They use AI to enhance cognitive diversity (to test hypotheses, simulate alternatives, and explore new conceptual terrain), but they remain the one doing the actual thinking.

Training students to become skilled users requires more than technical proficiency. It demands self-awareness, critical thinking, and disciplinary depth. It’s about cultivating humans who can collaborate with intelligence that isn’t their own, without losing their own capacity for discernment.

Redefining the Purpose of Education

If AI can already generate essays, debug code, and pass standardized exams, then education’s role cannot simply be to teach students to produce outputs. The value of learning must shift from what students know to how they know, from knowledge reproduction to knowledge interpretation.

This shift requires a new pedagogy, one that treats AI not as a threat but as a mirror reflecting our own intellectual habits. Such a pedagogy challenges both teachers and students to ask harder questions: What does it mean to learn when machines can answer for us? What is the role of struggle, confusion, or curiosity in an age of instant solutions?

Conclusion: Beyond AI Literacy Toward AI Wisdom

Generative AI has already changed how we work, learn, and think. It has democratized access to information while revealing new inequalities in how that information is used. My study shows that low-skill workers gained breadth but lost depth; they could cover more ground but could not build strong foundations. High-skill workers, by contrast, used AI to extend what they already knew, not to replace it.

The lesson for education is clear: the future of learning will not belong to those who use AI the most, but to those who use it wisely. We must move beyond teaching students how to prompt and start teaching them how to think alongside technology. This means thinking critically, creatively, and reflectively.

References

Acemoglu, D., Autor, D., & Hazell, J. (2021). AI and jobs: Evidence from online vacancies. Brookings Papers on Economic Activity, 2021(1), 1–46.

Brynjolfsson, E., Li, D., & Raymond, L. R. (2023). Generative AI at work (Working Paper No. 31161). National Bureau of Economic Research. https://doi.org/10.3386/w31161

Cho, E., & Gao, Q. (2025). Human capital in the age of ChatGPT: A paradox of depth and breadth (Working Paper).

Kokkodis, M., & Ipeirotis, P. G. (2023). The good, the bad, and the unhirable: Recommending job applicants in online labor markets. Management Science, 69(11), 6969–6987. https://doi.org/10.1287/mnsc.2023.4690